Optimizing I/O throughput

We got a customer request about performance issues they were seeing on startup on a particular set of machines.

Those machines run in a cloud environment, and they have… peculiar, one might say deviant, I/O characteristics. In particular, the I/O pipeline on those machines is wide, but very slow. What do I mean by that? I mean that any particular I/O operation on those machines is likely to be slow, but the idea is that you can get much better performance if you issue concurrent I/O. The system is supposed to handle that much better, and overall you’ll see the same relative performance as elsewhere.

This is a pretty big issue for us, because for many things, we really do care about serial I/O performance. For example, if we are committing a transaction, we really have no other way to handle it except to wait until the I/O is fully completed.

That said, the particular scenario where we had the problem was startup. If the database was under heavy load at the time it shut down, the recovery logs would be full, and the database would need to replay the recent actions. Note that shutdown performance is important, because in many cases we are running in an environment where shutdown comes with a ticking clock (in IIS or as a Windows Service).

At startup, we usually have more time, and it is expected that we’ll take a while to get up to speed. If nothing else, just bringing enough of the database into memory is going to take time, so on large databases, startup time is expected to be non-trivial.

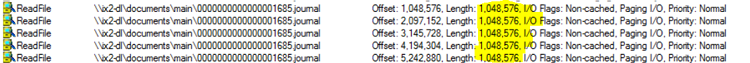

That said, the startup time on that set of machines was utterly atrocious. To figure out what was going on, I pulled out Process Monitor and looked at the File I/O. We got this:

We are reading from a journal, and that is pretty serial I/O (in the image, I’m running off a remote network drive, to simulate slow responses). Note that we need to read the log in a serial fashion, and the way the OS reads things, we read 32KB at a time.

Remember, we are reading things in a serial fashion. That means we take a lot of page faults, on a slow I/O system, and they are executed one after another.

Yes, that is a killer for perf. By the way, when I’m talking about a slow I/O system, I’m talking about > 0.5 ms per disk read for most requests (ideally, we would have latency of 0.05 – 0.15 ms). And we have quite a few of those, as you can imagine.

Since I know that we are going to be reading the whole journal, I used the PrefetchVirtualMemory() function and passed it the entire file (it is a maximum of 64MB, and we are going to need to read it all anyway). This gives the OS the maximum amount of freedom when reading the data, and it generates big, concurrent I/O. Here is what this looks like:

This also gives the wide I/O bandwidth a chance to play. We load the I/O subsystem with a lot of stuff that it can try to do in an optimized fashion.
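To make the idea concrete, here is a minimal sketch of "hint the whole mapping, then read it serially". It is not the code we actually run: PrefetchVirtualMemory is a Win32 call, and this sketch swaps in the POSIX-style hint that Python exposes as `mmap.madvise` with `MADV_WILLNEED`, which is only available on some platforms. The function name `read_journal_with_prefetch` is made up for illustration.

```python
import mmap
import os


def read_journal_with_prefetch(path: str) -> bytes:
    """Map a journal file, hint that all of it is needed, then read it serially."""
    with open(path, "rb") as f:
        if os.fstat(f.fileno()).st_size == 0:
            return b""  # an empty file cannot be memory mapped
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            # One hint covering the whole mapping, so the OS is free to issue
            # large, concurrent reads instead of faulting in one chunk at a time.
            # Assumption: madvise / MADV_WILLNEED stand in for
            # PrefetchVirtualMemory here, and they are skipped where missing.
            if hasattr(view, "madvise") and hasattr(mmap, "MADV_WILLNEED"):
                view.madvise(mmap.MADV_WILLNEED)
            return view[:]  # the serial pass over the journal
```

The hint is advisory, so the read still works without it; the only difference is how much freedom the OS has to batch the I/O.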

The next part that was expensive was that we need to apply the data from the journal files to the data file, and sync it.

The performance of syncing a file is related to the size of the file, unfortunately. And the file in question was large, over 45GB. Especially on such a system, we saw a lot of latency here, as in multiple minutes. One obvious optimization was to not sync per journal file, but sync once for the whole recovery process. That helped, but it was still too expensive.

Next, we tried pretty much everything we could think of.

- Switching to WriteFile (from using mmap and then calling FlushViewOfFile)

- Using async I/O (WriteFileEx)

- Using scatter / gather I/O with no buffering (saves the need to do sync in the end)

- Completion ports

- Asking a 4-month-old baby girl what she thinks about it (she threw up on the keyboard, which is what I wanted to do at the time, then she cried, and I joined her)

Nothing seemed to work. The major issue was that in this workload, we have a large file (45GB, as I said) and we are writing 4KB pages into it in effectively random places. In the workload we were trying to work with, there were roughly 256,000 individual 4KB writes (most of them weren’t consecutive, so we couldn’t get the benefit of that). That is about 1 GB of writing to do.

And nothing we could do would get us beyond 3MB/sec or so. Saturating the I/O subsystem with hundreds of thousands of small writes wouldn’t work, and we were at a loss. Note that a small test we made, just copying data around manually, resulted in roughly 10MB/sec peak performance on those machines. This is a very lame number, so there isn’t much that we can do.
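For a sense of scale, here is the back-of-the-envelope arithmetic using the figures quoted above. It assumes binary units (1KB = 1024 bytes) and treats the 3MB/sec ceiling as a steady rate, which is a simplification.

```python
PAGE_SIZE = 4 * 1024          # 4KB pages
WRITES = 256_000              # approximate number of individual page writes
THROUGHPUT = 3 * 1024 * 1024  # ~3MB/sec, the ceiling we kept hitting

total_bytes = WRITES * PAGE_SIZE
seconds = total_bytes / THROUGHPUT

print(f"{total_bytes / 1024**3:.2f} GB to write")  # prints "0.98 GB to write"
print(f"{seconds / 60:.1f} minutes at 3MB/sec")    # prints "5.6 minutes at 3MB/sec"
```

So even in the best case, applying the journals alone costs several minutes of startup time on those machines.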

Then I thought to ask, why are we seeing this only during startup? Surely this happens also on a regular basis. Why didn’t we notice?

The reason for that is pretty simple: we didn’t notice because we amortize the cost. Only on startup did we have to actually sit and wait for it to complete. So we dropped that requirement. We used to read all the journals, apply them to the data file, sync the data files and then delete the journals. Now we read the journals, apply them (via a memory map) to the data file, and only remember, in memory, which journal file we applied last.

There is a background process running that will take care of syncing the data file (and deleting the old journals). If we crash again, we’ll just have to replay the logs that we aren’t sure were synced before. This saves even more time.
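The bookkeeping behind this can be sketched roughly as follows. This is a toy model and not our actual implementation: the `RecoveryState` class, its method names, and the use of plain integers for journal numbers are all hypothetical, and the real sync and file deletion are reduced to comments.

```python
from dataclasses import dataclass, field


@dataclass
class RecoveryState:
    """Toy model of deferred syncing; every name here is illustrative."""
    last_applied: int = 0                             # kept in memory only
    journals_on_disk: set = field(default_factory=set)

    def apply(self, journal: int) -> None:
        # Startup path: write the journal's pages through the memory map and
        # just remember how far we got. No sync happens here.
        self.journals_on_disk.add(journal)
        self.last_applied = max(self.last_applied, journal)

    def background_sync(self) -> list:
        # Background path: (sync the data file here), after which every
        # journal up to last_applied is safe to delete.
        deleted = sorted(j for j in self.journals_on_disk if j <= self.last_applied)
        self.journals_on_disk.difference_update(deleted)
        return deleted

    def journals_to_replay(self) -> list:
        # After a crash the in-memory marker is gone, so whatever journals
        # are still on disk get replayed.
        return sorted(self.journals_on_disk)
```

The key property is that a journal is only deleted after the sync that covers it, so a crash in between costs a replay but never loses data.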

But we still have another issue. Writing to a memory-mapped file requires the OS to page the relevant pages into memory. And again, we are on slow I/O, and the OS will only page in the stuff that we touch, so this is again a serial process, which this time requires us to load about 1GB of data into memory at 3MB/sec. That is… not a good place to be. So the next step was to figure out all the addresses we’ll be writing to, and let the OS know that we’ll be fetching them. We do some work to make sure that we load those pages (and neighboring pages) into memory, and then we can write to them without paging for each page individually.
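One way to picture the "figure out all the addresses" step is the sketch below. It takes the byte offsets of the pending writes, widens each touched page by a few neighbors, and merges everything into a short list of ranges that could then be handed to the OS as prefetch hints. The function name `prefetch_ranges` and the `neighbors` parameter are assumptions made for illustration, not the code we shipped.

```python
PAGE_SIZE = 4096


def prefetch_ranges(offsets, neighbors=1, page_size=PAGE_SIZE):
    """Turn byte offsets of pending writes into merged (start, length) byte ranges.

    Each touched page is widened by `neighbors` pages on both sides, and
    ranges that overlap or touch are merged into one.
    """
    pages = sorted({off // page_size for off in offsets})
    ranges = []  # list of [first_page, last_page], both inclusive
    for page in pages:
        first = max(0, page - neighbors)
        last = page + neighbors
        if ranges and first <= ranges[-1][1] + 1:
            # Overlaps or touches the previous range: extend it.
            ranges[-1][1] = max(ranges[-1][1], last)
        else:
            ranges.append([first, last])
    # Note: the last range may run past the end of the file; a real caller
    # would clamp it to the file size before using it.
    return [(first * page_size, (last - first + 1) * page_size)
            for first, last in ranges]


print(prefetch_ranges([0, 4096, 40960]))
# prints [(0, 12288), (36864, 12288)]
```

Fewer, larger ranges give the OS the same freedom to issue wide, concurrent reads that prefetching the whole journal did.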

A nice side effect of this is that because this is running on the latest changes in the system, it has the effect of preloading into memory the pages that are likely to be in use after the database has started.

That is a lot of work, but to be perfectly frank, this is mostly optimizing in a bad environment. The customer can’t walk away from their current machines easily, but the I/O rates those machines have would make any database sit in a corner and cry.

| Reference: | Optimizing I/O throughput from our NCG partner Oren Eini at the Ayende @ Rahien blog. |